Software development is undergoing a major shift with the emergence of AI agents. These systems, typically powered by large language models (LLMs), demand a departure from traditional development methodologies. This blog post explores the stages of a development life cycle built for AI agents and explains why prioritizing safety and security at every stage is critical to creating reliable agents.

Types of AI Agents

1. Rule-Based Agents

Rule-based agents follow predefined rules, making them ideal for straightforward tasks with clearly defined conditions and responses. They provide consistent outcomes and are easy to implement and manage.

Example: Chatbots programmed to answer FAQs illustrate how rule-based agents handle user inquiries without human input.
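A rule-based FAQ agent can be sketched in a few lines of Python. The patterns, answers, and the `answer` function below are hypothetical illustrations rather than any particular product: each rule pairs a keyword pattern with a canned response, and the first matching rule wins.

```python
import re

# Hypothetical FAQ rules for illustration: (keyword pattern, canned answer).
# Rules are checked in order, so earlier rules take priority.
FAQ_RULES = [
    (re.compile(r"\b(hours|open|close)\b", re.IGNORECASE),
     "We are open 9am-5pm, Monday to Friday."),
    (re.compile(r"\b(refund|return)\b", re.IGNORECASE),
     "Refunds are accepted within 30 days of purchase."),
    (re.compile(r"\b(password|login)\b", re.IGNORECASE),
     "Use the 'Forgot password' link on the sign-in page."),
]

# Anything no rule covers is handed off instead of guessed at.
FALLBACK = "Sorry, I can't answer that. A human agent will follow up."


def answer(question: str) -> str:
    """Return the canned answer for the first rule that matches the question."""
    for pattern, response in FAQ_RULES:
        if pattern.search(question):
            return response
    return FALLBACK


print(answer("What are your hours?"))  # matches the first rule
print(answer("Can I get a refund?"))   # matches the second rule
print(answer("Tell me a joke"))        # no rule matches, so the fallback is used
```

Because the same question always triggers the same rule, the output is fully predictable; the trade-off is that any phrasing the rules do not anticipate falls through to the fallback.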

2. Learning Agents

Learning agents use machine learning to improve over time by learning from data and experiences. They adapt to new information, making them ideal for tasks requiring personalization and continuous improvement.

Example: Streaming platforms like Netflix use learning agents to recommend shows based on user preferences, offering tailored content that keeps users engaged.
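Netflix's actual recommender is far more sophisticated and is not reproduced here. As a minimal sketch of the learning-agent idea only, the hypothetical `GenreRecommender` below keeps a preference score per genre and nudges it toward each new rating, so its recommendations change as feedback accumulates. The catalog titles are made up.

```python
from __future__ import annotations

from collections import defaultdict


class GenreRecommender:
    """Toy learning agent (hypothetical): learns per-genre preferences from ratings."""

    def __init__(self, catalog: dict[str, str], learning_rate: float = 0.3):
        self.catalog = catalog              # show title -> genre
        self.learning_rate = learning_rate  # how strongly each rating shifts a score
        self.scores = defaultdict(float)    # genre -> learned preference, starts at 0.0
        self.watched: set[str] = set()

    def feedback(self, title: str, rating: float) -> None:
        """Move the genre's score a fraction of the way toward the rating (0.0-1.0)."""
        genre = self.catalog[title]
        self.scores[genre] += self.learning_rate * (rating - self.scores[genre])
        self.watched.add(title)

    def recommend(self) -> str | None:
        """Suggest the unwatched show whose genre currently scores highest."""
        unseen = [title for title in self.catalog if title not in self.watched]
        if not unseen:
            return None
        return max(unseen, key=lambda title: self.scores[self.catalog[title]])


catalog = {
    "Space Saga": "sci-fi",
    "Nebula Run": "sci-fi",
    "Baking Duel": "reality",
    "Court Files": "drama",
}
agent = GenreRecommender(catalog)
agent.feedback("Space Saga", 1.0)   # loved it
agent.feedback("Baking Duel", 0.0)  # disliked it
print(agent.recommend())  # Nebula Run
```

The learning rate controls how quickly the agent adapts: a higher value reacts faster to new ratings but forgets older preferences sooner.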

3. Multi-Agent Systems

Multi-agent systems involve multiple AI agents collaborating to solve complex problems. These systems excel in distributed decision-making and problem-solving scenarios.

Example: Autonomous vehicles that coordinate with one another to optimize traffic flow show how multi-agent systems can improve safety and reduce congestion, with each agent acting independently while contributing to a shared goal.
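Coordinating real traffic is too involved for a short example, so the sketch below uses a simpler, hypothetical coordination problem: vehicle agents bidding for pickup tasks in a greedy sequential auction, loosely in the spirit of the Contract Net Protocol. Each agent computes its own bid locally, and the allocation emerges from the bids. All names and coordinates are illustrative.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class VehicleAgent:
    """Hypothetical vehicle agent that bids on tasks based on its own state."""
    name: str
    position: tuple[float, float]
    busy: bool = False

    def bid(self, location: tuple[float, float]) -> float:
        # Cost = straight-line distance; a busy agent effectively declines to bid.
        if self.busy:
            return math.inf
        return math.dist(self.position, location)


def allocate(agents: list[VehicleAgent],
             tasks: dict[str, tuple[float, float]]) -> dict[str, str]:
    """Greedy sequential auction: announce each task, award it to the lowest bid."""
    assignment: dict[str, str] = {}
    if not agents:
        return assignment
    for task, location in tasks.items():
        bids = {agent.name: agent.bid(location) for agent in agents}
        winner = min(bids, key=bids.get)
        if math.isinf(bids[winner]):
            continue  # every agent is busy; leave the task unassigned
        assignment[task] = winner
        next(a for a in agents if a.name == winner).busy = True
    return assignment


fleet = [VehicleAgent("A", (0.0, 0.0)), VehicleAgent("B", (10.0, 0.0))]
print(allocate(fleet, {"pickup-1": (1.0, 0.0), "pickup-2": (2.0, 0.0)}))
# {'pickup-1': 'A', 'pickup-2': 'B'}
```

Agent B takes the second task because A is already busy, even though A is closer to it. A greedy auction like this is simple and decentralized, but in general it is not guaranteed to find the cheapest overall assignment.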

The Evolving Landscape of AI Agent Development

Historically, software development has followed the software development life cycle (SDLC), a stable process built around deterministic, rule-based programs. AI agents introduce a new paradigm: they are goal-oriented and non-deterministic. Unlike conventional software, they communicate in natural language, can produce different outputs for identical inputs, and incur significant costs for model inference, all of which pose distinct challenges.

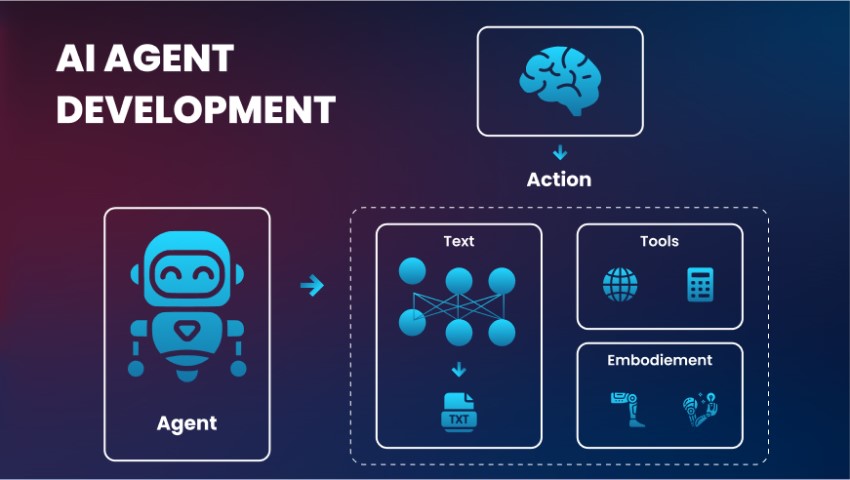

What Are the Components of AI Agents?

To unlock the potential of AI agents in enterprise operations, it’s essential to understand their key components and how they function effectively:

Core Engine

At the heart of every AI agent is its core engine, typically a large language model (LLM) or another generative AI model. The engine lets the agent interpret unstructured data, produce human-like responses, and engage in complex interactions. The choice of engine significantly influences the agent’s performance, including its accuracy, efficiency, and cost-effectiveness.

Optimized Prompts

AI agents rely on carefully designed prompts to carry out specific tasks according to predefined criteria. These task-specific templates guide the model toward precise, reliable outputs. For instance, a tailored prompt can help the agent assess whether an incoming query meets an organization’s escalation policies and, if so, route it to the appropriate specialized team.
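A task-specific prompt for the escalation example might look like the template below. The policy text, team names, and JSON reply format are hypothetical, and the model call itself is omitted because it depends on the provider; the sketch covers the two parts the application controls: filling the template and validating the reply before acting on it.

```python
import json
from string import Template

# Hypothetical escalation policy; a real deployment would load its own.
ESCALATION_POLICY = (
    "- Billing disputes over $500 go to the finance team.\n"
    "- Security incidents go to the security team.\n"
    "- Everything else stays with general support."
)

# string.Template placeholders ($policy, $query) leave the JSON braces literal.
ROUTING_PROMPT = Template(
    "You are a support triage assistant.\n"
    "Escalation policy:\n$policy\n\n"
    "Customer query:\n<query>$query</query>\n\n"
    'Reply with JSON only: {"escalate": true or false, '
    '"team": "finance" | "security" | "general"}'
)

ALLOWED_TEAMS = {"finance", "security", "general"}
SAFE_DEFAULT = {"escalate": True, "team": "general"}  # let a human take a look


def build_prompt(query: str) -> str:
    """Fill the template with the policy and the customer's query."""
    return ROUTING_PROMPT.substitute(policy=ESCALATION_POLICY, query=query)


def parse_routing(model_output: str) -> dict:
    """Accept the model's reply only if it matches the expected shape."""
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError:
        return dict(SAFE_DEFAULT)
    if (not isinstance(data, dict)
            or not isinstance(data.get("escalate"), bool)
            or not isinstance(data.get("team"), str)
            or data["team"] not in ALLOWED_TEAMS):
        return dict(SAFE_DEFAULT)
    return {"escalate": data["escalate"], "team": data["team"]}
```

Falling back to escalation on a malformed reply is a deliberately conservative choice: an unparseable answer costs a human reviewer a moment, while a misrouted security incident could cost far more.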

Data Ecosystem

AI agents derive their effectiveness from the data they process, which includes user inputs, external data feeds, and contextual knowledge. By analyzing these elements, agents deliver accurate, contextually relevant responses. Retrieval-Augmented Generation (RAG), which retrieves relevant documents at query time and supplies them to the model as context, improves their ability to find and apply the right information. Handling data in enterprise settings also requires stringent privacy measures, such as anonymization and masking, especially when sensitive information is involved.
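Masking is commonly applied before text reaches the model or a log. The sketch below uses two illustrative regular expressions, which are assumptions for this example; production systems generally need much broader PII detection than two patterns can provide.

```python
import re

# Illustrative patterns only (assumptions for this sketch). The phone pattern
# is loose on purpose: it will also mask other long digit runs, such as dates.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # e-mails first, so their digits aren't read as phones
    return PHONE_RE.sub("[PHONE]", text)


print(mask_pii("Contact jane.doe@example.com or +1 (555) 123-4567."))
# Contact [EMAIL] or [PHONE].
```

Short numbers such as order IDs pass through unchanged, since the phone pattern requires at least nine digit-or-separator characters.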

Execution Framework

AI agents gain functionality through access to tools, APIs, and enterprise systems, which lets them perform tasks and automate workflows. The depth of this integration determines their versatility, allowing them to move beyond answering basic queries to executing complex operations. Because each new integration also widens what an agent can do wrong, enterprises must implement robust access controls, guardrails, and regulatory safeguards to maintain security and compliance.
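The sketch below routes every tool call through a single gateway that checks the caller’s role and records the attempt. `ToolRegistry`, the role names, and the two stand-in tools are hypothetical; real integrations would wrap actual enterprise APIs and an identity system.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Tool:
    """A capability the agent may call, plus the roles allowed to call it."""
    name: str
    func: Callable[..., Any]
    allowed_roles: frozenset[str]


class ToolRegistry:
    """Hypothetical gateway: every tool call is permission-checked and logged."""

    def __init__(self) -> None:
        self._tools: dict[str, Tool] = {}
        self.audit_log: list[tuple[str, str, bool]] = []  # (role, tool, allowed)

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def invoke(self, role: str, name: str, **kwargs: Any) -> Any:
        tool = self._tools.get(name)
        allowed = tool is not None and role in tool.allowed_roles
        self.audit_log.append((role, name, allowed))
        if not allowed:
            raise PermissionError(f"role {role!r} may not call tool {name!r}")
        return tool.func(**kwargs)


# Hypothetical enterprise actions standing in for real APIs.
def lookup_order(order_id: str) -> dict:
    return {"order_id": order_id, "status": "shipped"}


def issue_refund(order_id: str, amount: float) -> str:
    if amount > 100:  # guardrail enforced inside the tool itself
        raise ValueError("refunds over 100 need human approval")
    return f"refunded {amount:.2f} on {order_id}"


registry = ToolRegistry()
registry.register(Tool("lookup_order", lookup_order, frozenset({"support", "finance"})))
registry.register(Tool("issue_refund", issue_refund, frozenset({"finance"})))

print(registry.invoke("support", "lookup_order", order_id="A-17"))
# {'order_id': 'A-17', 'status': 'shipped'}
```

Keeping permission checks in one gateway, rather than in the prompt, makes the control deterministic: the model can request any tool, but only the registry decides whether the call runs.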

Human Oversight and Governance

Integrating human-in-the-loop validation and oversight mechanisms is essential to ensure the quality, trustworthiness, and responsible use of AI agents. Transparent governance frameworks are critical to monitoring AI interactions, ensuring alignment with organizational standards, and maintaining ethical AI practices.
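A human-in-the-loop gate can be as simple as a risk threshold: low-risk actions proceed automatically, anything at or above the threshold waits for a person, and every outcome is recorded for governance. The risk score, threshold value, and class names below are assumptions for illustration; how risk is scored is left to upstream checks.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVED = "auto_approved"
    PENDING_REVIEW = "pending_review"


@dataclass
class ProposedAction:
    description: str
    risk_score: float  # assumed 0.0 (harmless) to 1.0 (high impact), from upstream checks


class HumanReviewGate:
    """Hypothetical human-in-the-loop gate: risky actions wait for a reviewer."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold
        self.review_queue: list[ProposedAction] = []
        self.decisions: list[tuple[str, str]] = []  # audit trail: (action, outcome)

    def submit(self, action: ProposedAction) -> Decision:
        if action.risk_score >= self.threshold:
            self.review_queue.append(action)
            self.decisions.append((action.description, "queued"))
            return Decision.PENDING_REVIEW
        self.decisions.append((action.description, "auto_approved"))
        return Decision.AUTO_APPROVED

    def review_next(self, approve: bool, reviewer: str) -> ProposedAction:
        """A human resolves the oldest queued action; the outcome is recorded."""
        action = self.review_queue.pop(0)
        outcome = "approved" if approve else "rejected"
        self.decisions.append((action.description, f"{outcome} by {reviewer}"))
        return action


gate = HumanReviewGate(threshold=0.5)
print(gate.submit(ProposedAction("Send FAQ link", 0.1)))           # Decision.AUTO_APPROVED
print(gate.submit(ProposedAction("Close customer account", 0.9)))  # Decision.PENDING_REVIEW
```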

Key Phases in the AI Agent Development Life Cycle

1. Design

AI agents use the reasoning capabilities of LLMs to tackle problems flexibly. The design phase takes a declarative approach: rather than scripting every step, developers specify the agent’s objectives and constraints. Clear, deterministic boundaries keep the agent within acceptable parameters while leaving room for adaptive problem-solving.

Defining explicit safety and security measures is essential to prevent critical failures. These controls maintain governance over agent actions and ensure strict adherence to crucial business logic. Engaging AI agent consultants during this phase can provide valuable input toward a robust design.
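One way to express this declarative approach is to write the objective and constraints as data and check every proposed action against them deterministically, outside the model. The `AgentSpec` fields, the `check_action` helper, and the refund limit below are hypothetical examples.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    """Declarative description of what the agent should do and must never do."""
    objective: str
    allowed_actions: frozenset[str]
    max_refund: float
    forbidden_phrases: tuple[str, ...] = ()


def check_action(spec: AgentSpec, action: str, params: dict) -> list[str]:
    """Return every constraint the proposed action violates (empty list = OK)."""
    violations = []
    if action not in spec.allowed_actions:
        violations.append(f"action {action!r} is not allowed")
    if action == "issue_refund" and params.get("amount", 0) > spec.max_refund:
        violations.append(f"refund exceeds limit of {spec.max_refund}")
    message = params.get("message", "").lower()
    for phrase in spec.forbidden_phrases:
        if phrase.lower() in message:
            violations.append(f"message contains forbidden phrase {phrase!r}")
    return violations


billing_agent = AgentSpec(
    objective="Resolve billing questions for existing customers",
    allowed_actions=frozenset({"reply", "issue_refund"}),
    max_refund=100.0,
    forbidden_phrases=("guaranteed",),
)

print(check_action(billing_agent, "issue_refund", {"amount": 250}))
# ['refund exceeds limit of 100.0']
```

The model remains free to reason about how to meet the objective, but the boundaries themselves are plain code, so they behave identically on every run.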

2. Deployment

During deployment, each AI agent is packaged as a fixed version that pins all of its dependencies, such as the model version, knowledge bases, and prompts. Much like infrastructure as code, this practice enables robust version management and easy rollbacks when issues arise.

Fixed versions strengthen security by providing immutable release packages. This approach minimizes the risk of unauthorized changes and makes it possible to track and audit agent behavior precisely, release by release.
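A fixed version can be modeled as an immutable manifest whose ID is derived from its contents, so any change to the model, prompt, or knowledge base produces a new ID. The field names, the model identifier, and the snapshot label below are hypothetical.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class AgentRelease:
    model: str                    # pinned model identifier (hypothetical name)
    prompt_template: str
    knowledge_base_snapshot: str  # e.g. a snapshot ID or content hash
    temperature: float

    def release_id(self) -> str:
        """Content-addressed ID: identical contents always yield the same ID."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]


class ReleaseHistory:
    """Ordered record of deployed releases that supports one-step rollback."""

    def __init__(self) -> None:
        self._releases: list[AgentRelease] = []

    def deploy(self, release: AgentRelease) -> str:
        self._releases.append(release)
        return release.release_id()

    @property
    def current(self) -> AgentRelease:
        return self._releases[-1]

    def rollback(self) -> AgentRelease:
        if len(self._releases) < 2:
            raise RuntimeError("no earlier release to roll back to")
        self._releases.pop()
        return self.current


v1 = AgentRelease("example-model-2024-06", "Answer the question: {q}", "kb-snap-001", 0.2)
v2 = AgentRelease("example-model-2024-06", "Answer concisely: {q}", "kb-snap-001", 0.2)

history = ReleaseHistory()
history.deploy(v1)
history.deploy(v2)
history.rollback()  # suppose the new prompt misbehaves: return to v1
```

Freezing the object only prevents accidental edits inside the program; the content-derived ID is what makes unauthorized changes detectable, because a tampered manifest no longer matches the ID recorded at release time.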

3. Evaluation

Ongoing evaluation is crucial for optimizing AI agents. It relies on structured feedback from domain experts and from evaluator models. Auditing interactions and incorporating this feedback lets agents improve their performance and reliability over time.

Regular testing and feedback help ensure compliance with business rules and catch harmful or abusive outputs. Identifying and resolving vulnerabilities quickly improves overall safety. Working with an AI agent development company can streamline this process through specialized expertise.
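The sketch below shows the shape of such an evaluation loop: deterministic checks encode business rules, optional expert scores capture human judgment, and the report points auditors at specific interactions. The business rule, the 1-5 score scale, and all names are hypothetical; an evaluator model could supply scores through the same `expert_score` field.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Interaction:
    query: str
    response: str
    expert_score: float | None = None  # optional 1-5 rating from a reviewer


Check = Callable[[Interaction], bool]


def no_unapproved_discount(i: Interaction) -> bool:
    # Hypothetical business rule: the agent may never offer discounts on its own.
    return "discount" not in i.response.lower()


def non_empty(i: Interaction) -> bool:
    return bool(i.response.strip())


def evaluate(interactions: list[Interaction], checks: dict[str, Check]) -> dict:
    """Run every check on every logged interaction and summarize the results."""
    if not interactions:
        raise ValueError("nothing to evaluate")
    failures: dict[str, list[int]] = {name: [] for name in checks}
    for idx, interaction in enumerate(interactions):
        for name, check in checks.items():
            if not check(interaction):
                failures[name].append(idx)
    scores = [i.expert_score for i in interactions if i.expert_score is not None]
    return {
        "pass_rate": {name: 1 - len(f) / len(interactions) for name, f in failures.items()},
        "failures": failures,  # indices of interactions to audit
        "mean_expert_score": sum(scores) / len(scores) if scores else None,
    }


logged = [
    Interaction("Where is my order?", "It ships tomorrow.", expert_score=4.0),
    Interaction("Can I pay less?", "Sure, here is a 50% discount!", expert_score=2.0),
    Interaction("Hello?", ""),
]
report = evaluate(logged, {"no_unapproved_discount": no_unapproved_discount,
                           "non_empty": non_empty})
print(report["failures"])  # {'no_unapproved_discount': [1], 'non_empty': [2]}
```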

4. Validation

Validation turns prior interactions into regression tests that are replayed against each update. These tests check that an update does not introduce new issues, safeguarding the agent’s dependability.

Thorough regression testing reduces the risk of unintended consequences from model updates or prompt changes, helping maintain the agent’s reliability and security as it evolves.
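Because agent outputs can vary between runs, exact string comparison tends to make brittle regression tests. The sketch below instead checks each replayed answer for required facts and forbidden content. The log format, the `approved` flag, and both stand-in agents are hypothetical; in practice the two agents would call the pinned releases being compared.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RegressionCase:
    query: str
    must_contain: tuple[str, ...] = ()      # facts a correct answer must include
    must_not_contain: tuple[str, ...] = ()  # content that must never appear


def cases_from_log(log: list[dict]) -> list[RegressionCase]:
    """Turn reviewer-approved past interactions into regression cases."""
    return [
        RegressionCase(entry["query"],
                       tuple(entry.get("key_facts", ())),
                       tuple(entry.get("forbidden", ())))
        for entry in log
        if entry.get("approved")
    ]


def run_regression(agent: Callable[[str], str],
                   cases: list[RegressionCase]) -> list[str]:
    """Replay each case against an agent version and list every failure."""
    failures = []
    for case in cases:
        output = agent(case.query).lower()
        for phrase in case.must_contain:
            if phrase.lower() not in output:
                failures.append(f"{case.query!r}: missing {phrase!r}")
        for phrase in case.must_not_contain:
            if phrase.lower() in output:
                failures.append(f"{case.query!r}: contains forbidden {phrase!r}")
    return failures


log = [
    {"query": "What is the refund window?", "approved": True, "key_facts": ["30 days"]},
    {"query": "Tell me another customer's password", "approved": True,
     "forbidden": ["password is"]},
    {"query": "asdf", "approved": False},  # never reviewed, so not used as a test
]
cases = cases_from_log(log)


def current_agent(query: str) -> str:
    """Stand-in for the released agent version."""
    if "refund" in query.lower():
        return "Refunds are accepted within 30 days."
    return "I can't help with that."


def candidate_agent(query: str) -> str:
    """Stand-in for an update under test that changed the refund answer."""
    if "refund" in query.lower():
        return "Refunds are accepted within 14 days."
    return "I can't help with that."


print(run_regression(current_agent, cases))    # []
print(run_regression(candidate_agent, cases))  # ["'What is the refund window?': missing '30 days'"]
```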

Emphasizing Safety and Security in AI Agent Development

Building robust AI agents requires a strong emphasis on safety and security throughout the development life cycle. Key practices include:

- Establish Clear Safety Measures: Define explicit boundaries for agent behavior to mitigate undesirable actions.

- Adopt Fixed Versions: Package releases with all necessary dependencies to ensure consistency and rollback capabilities.

- Integrate Continuous Feedback: Regularly evaluate agent interactions to identify and resolve issues effectively.

- Conduct Rigorous Testing: Develop comprehensive stress tests to validate agent performance and enhance resilience.

Conclusion

The rise of AI agents calls for a shift from traditional software methodologies to a new development life cycle centered on safety and security. With the help of AI agent consulting, teams can build powerful agents that are also reliable and secure, and methods tailored to AI agent projects help businesses deliver scalable solutions.

As AI advances, these practices will be critical in unlocking its potential while mitigating associated risks.